GStreamer is a pipeline-based multimedia framework that links together a wide variety of media processing systems to complete complex workflows. It supports many media-handling tasks, from simple audio playback to combined audio and video playback, recording, streaming and editing. The pipeline design serves as a base for many types of multimedia applications, such as video editors, transcoders, streaming media broadcasters and media players.

It is designed to work on different operating systems and is free and open-source software.

GStreamer processes media by connecting a number of processing elements into a pipeline. Each element is provided by a plug-in. Elements communicate by means of pads. A source pad on one element can be connected to a sink pad on another. When the pipeline is in the playing state, data buffers flow from the source pad to the sink pad. Pads negotiate the kind of data that will be sent using capabilities.

GStreamer is mainly used to build applications, but it also comes with a set of command-line tools that let you use it directly.

To go deeper into the framework, please read the tutorials on the project website.

How to install GStreamer

To install the framework, please follow the instructions for your operating system from the download page.

On Windows, at the time of writing, the MinGW runtime installer is the suggested package (the current version is 1.16.2). It is available for both 32-bit and 64-bit architectures and matches the documentation more closely. The MSVC 64-bit (VS 2019) runtime installer is also available, but it doesn't seem to work as expected. Keep in mind that GStreamer is a Linux-centric framework!

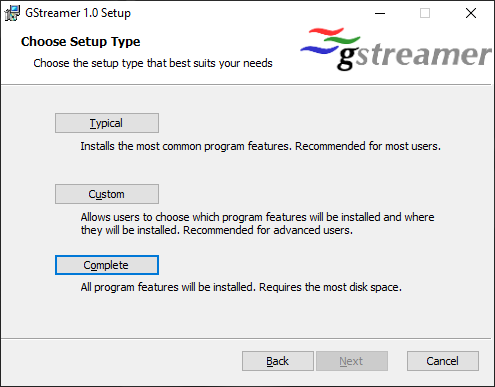

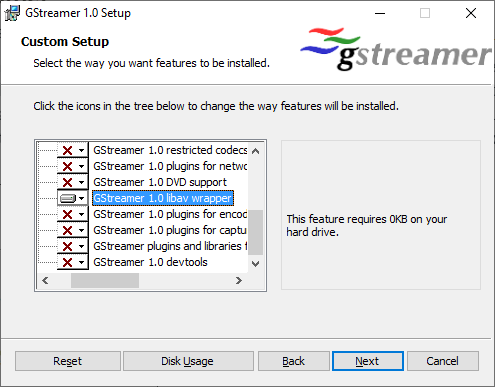

Tip: choose Complete installation; if you prefer Typical, then also add the libav wrapper.

Tip: add the bin folder to the system PATH; it will avoid a lot of frustration.
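To confirm that the PATH tip took effect, a small script can look up the command-line tools. This is only a minimal sketch: the helper name `find_gst_tool` is made up for illustration, and it assumes the tools keep their standard names, `gst-launch-1.0` and `gst-inspect-1.0`.

```python
import shutil


def find_gst_tool(name="gst-launch-1.0"):
    """Return the full path of a GStreamer tool found on PATH, or None."""
    # shutil.which searches the directories listed in PATH, like the shell does
    return shutil.which(name)


if __name__ == "__main__":
    for tool in ("gst-launch-1.0", "gst-inspect-1.0"):
        path = find_gst_tool(tool)
        print(f"{tool}: {path if path else 'not found on PATH'}")
```

If a tool is reported as not found, open a new terminal after changing PATH, since running shells usually keep the old value.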

If you're on Linux or a BSD variant, you can install GStreamer using your package manager.

What are plug-ins?

GStreamer plug-ins provide the building blocks of a pipeline:

- source elements feed data into the pipeline

- filters transform or parse data

- sink elements consume data at the end of a pipeline branch

They are divided into four different packages, named:

- BASE

"a small and fixed set of plug-ins, covering a wide range of possible types of elements..."

- THE GOOD

"a set of plug-ins that we consider to have good quality code, correct functionality, our preferred license..."

- THE UGLY

"a set of plug-ins that have good quality and correct functionality, but distributing them might pose problems..."

- THE BAD

"a set of plug-ins that aren't up to par compared to the rest..."

How do I connect to CamON Live Streaming?

To quickly try GStreamer with CamON, you can use the gst-launch-1.0 command-line tool, which builds and runs basic pipelines.

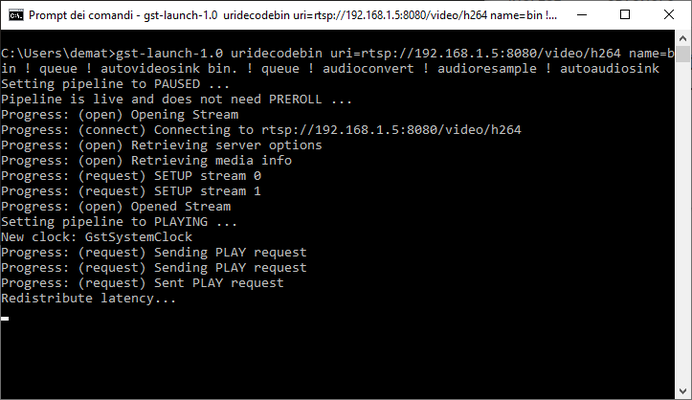

Assuming that the app is running on a device with IP address 192.168.1.5 and uses port 8080, we can run the following command:

gst-launch-1.0 uridecodebin uri=rtsp://192.168.1.5:8080/video/h264 name=bin ! queue ! autovideosink bin. ! queue ! audioconvert ! audioresample ! autoaudiosink

Here we are creating a pipeline with the following two branches (please check the syntax description for details):

- uridecodebin > queue > autovideosink

- uridecodebin > queue > audioconvert > audioresample > autoaudiosink

The plug-ins used perform the following actions:

- uridecodebin

Decodes data from a URI into raw media. It selects a source element that can handle the given uri scheme and connects it to a decodebin; decodebin auto-magically constructs a decoding pipeline using available decoders and demuxers via auto-plugging.

Here the input URI is rtsp://192.168.1.5:8080/video/h264 and there will be two outputs of types video/x-raw and audio/x-raw.

- queue

Creates a new thread on the source pad to decouple the processing on sink and source pad.

- autovideosink

Is a video sink that automatically detects an appropriate video sink to use.

- audioconvert

Converts raw audio buffers between various possible formats.

Here it is used to convert the audio from mono to stereo.

- audioresample

Resamples raw audio buffers to different sample rates.

Here it converts the sample-rate from 48000 to 44100 Hz.

- autoaudiosink

Is an audio sink that automatically detects an appropriate audio sink to use.
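Before running the pipeline, it can help to check that every element listed above is actually installed, for example when a Typical installation was chosen. The following is a sketch under a few assumptions: the helper name `missing_elements` is hypothetical, and it relies on the `--exists` option of `gst-inspect-1.0`, which exits with status 0 only when the named element or plug-in is available.

```python
import shutil
import subprocess

# Elements used by the playback pipeline described above
PIPELINE_ELEMENTS = [
    "uridecodebin", "queue", "autovideosink",
    "audioconvert", "audioresample", "autoaudiosink",
]


def missing_elements(elements, tool="gst-inspect-1.0"):
    """Return the elements GStreamer cannot find, or None if the tool is not on PATH."""
    tool_path = shutil.which(tool)
    if tool_path is None:
        return None
    missing = []
    for element in elements:
        # A non-zero exit status means the element (or plug-in) is not installed
        result = subprocess.run(
            [tool_path, "--exists", element],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
        if result.returncode != 0:
            missing.append(element)
    return missing


if __name__ == "__main__":
    report = missing_elements(PIPELINE_ELEMENTS)
    if report is None:
        print("gst-inspect-1.0 is not on PATH")
    else:
        print("missing elements:", report if report else "none")
```

An empty list means all six elements were found; a missing element usually points to a plug-in package that was not installed.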

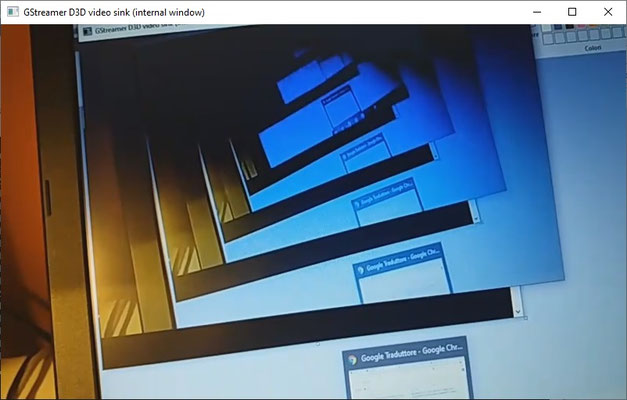

If everything works as expected, the video will show up and we'll also be able to hear the audio.
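When the device address changes often, the same command can be assembled by a script rather than typed by hand. The sketch below rebuilds the two-branch description used above from a host and a port; the function names `playback_pipeline` and `launch` are illustrative only, and the `/video/h264` path is taken from the example URI.

```python
import shlex
import shutil
import subprocess


def playback_pipeline(host, port):
    """Build the two-branch playback description for a CamON device."""
    uri = f"rtsp://{host}:{port}/video/h264"
    video_branch = "queue ! autovideosink"
    audio_branch = "queue ! audioconvert ! audioresample ! autoaudiosink"
    # "bin." refers back to the element named "bin" to start the second branch
    return f"uridecodebin uri={uri} name=bin ! {video_branch} bin. ! {audio_branch}"


def launch(host, port):
    """Run the pipeline with gst-launch-1.0 and wait until it exits."""
    tool = shutil.which("gst-launch-1.0")
    if tool is None:
        raise FileNotFoundError("gst-launch-1.0 is not on PATH")
    # No shell is involved, so "!" needs no quoting; each token is one argument
    return subprocess.run([tool, *shlex.split(playback_pipeline(host, port))])


if __name__ == "__main__":
    print(playback_pipeline("192.168.1.5", 8080))
```

Calling `launch("192.168.1.5", 8080)` should behave like the command shown earlier, provided the app is reachable at that address.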

More control in OBS

OBS has a plug-in that allows a pipeline to be specified as a source.

To make it work:

- install GStreamer

- add the bin folder to the system PATH

- place the obs-gstreamer.dll plug-in in the obs-plugins folder

- check the documentation for usage details

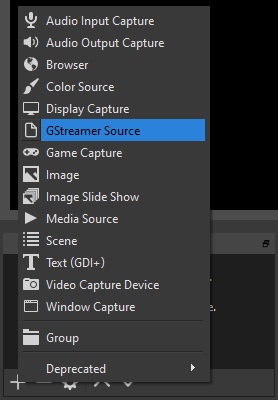

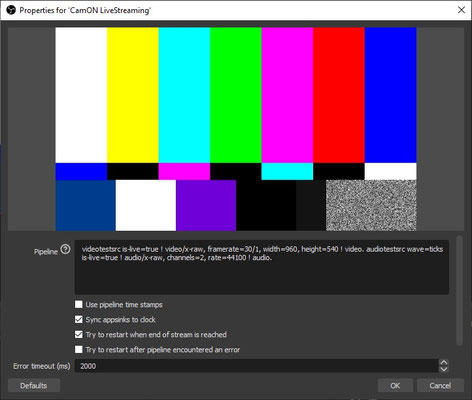

Now, when adding a new source, the menu entry GStreamer Source should be available: it will add a default pipeline to show a demo stream.

Great! We are now ready to change the pipeline so that we can see the stream from our app.

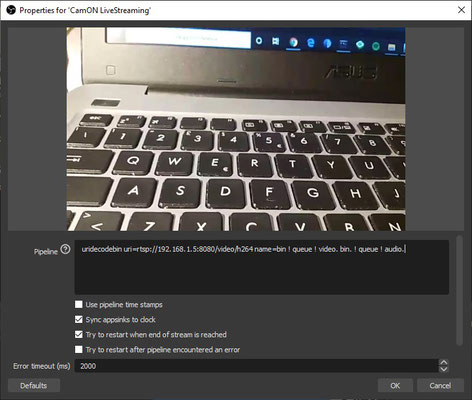

The plug-in defines "video" and "audio" as the names to use for the media sinks. Named elements are referred to using their name followed by a dot.

We don't need to convert the audio since the OBS plug-in can handle the original format.

uridecodebin uri=rtsp://192.168.1.5:8080/video/h264 name=bin ! queue ! video. bin. ! queue ! audio.

With the GStreamer uridecodebin plug-in, it is possible to force the TCP transport (the default transport is UDP), which helps when a firewall blocks UDP traffic. To do this, specify rtspt as the protocol.

uridecodebin uri=rtspt://192.168.1.5:8080/video/h264 name=bin ! queue ! video. bin. ! queue ! audio.

This feature is not otherwise supported by OBS.
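The two OBS pipelines differ only in the URI scheme, so the text pasted into the GStreamer Source can be generated from a single switch. This is a minimal sketch: the function name `obs_pipeline` and the `force_tcp` parameter are made up for illustration, while the "video." and "audio." sink names are the ones defined by the OBS plug-in.

```python
def obs_pipeline(host, port, force_tcp=False):
    """Build the pipeline text for the OBS GStreamer Source."""
    # "rtspt" asks for RTSP over TCP; plain "rtsp" keeps the default transport
    scheme = "rtspt" if force_tcp else "rtsp"
    uri = f"{scheme}://{host}:{port}/video/h264"
    return f"uridecodebin uri={uri} name=bin ! queue ! video. bin. ! queue ! audio."


if __name__ == "__main__":
    print(obs_pipeline("192.168.1.5", 8080))
    print(obs_pipeline("192.168.1.5", 8080, force_tcp=True))
```

The first line printed is the default pipeline and the second is the TCP variant.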